This winter, I had an opportunity to participate in an information research team that had a chance to interview top executives in health care in Massachusetts. This included the CEOs of insurance companies, regulators from the Attorney General’s office, and medical directors of major medical networks and hospitals. The goal of this project was to understand one term, “Cost Containment”: what drives rising health care costs, and what can be done to slow the rate of growth.

When someone with taxonomy skills participates in these types of investigations, it is hard not to put those taxonomy skills to work. What did I learn from this process that might be applicable to best practice and to understanding health care cost containment?

1) Start with a simple but important question as a guide for developing deeper knowledge

This group started with the question “What is cost containment?” It is a fairly fundamental question, since we in Massachusetts are fortunate to have near-universal coverage (about 97%) but still need to control costs. By asking this fundamental question, the group could collect basic facts from each key player on the same topic and understand how proposed strategies are defined from the point of view of the key players who are shaping policy.

2) Get to know the cast of characters

Remember the adage that the key to a baseball game is to know the players; the same applies to understanding a complex issue. We need to know who the users are and what brought them to these meetings. It is critical to identify the constituencies in health care, all of whom have different goals in any situation. The key actors we identified were:

- Insurers (also known as Payers)

- Providers (Hospitals, Doctors, Specialists)

- Regulators (government, legislature, attorney general)

- Consumers (includes business owners, patients, local government)

- Purchasing agents (people who buy insurance for large groups — government, business, insurance agents)

3) Understand the power relationships

Some actors have more power and are core to the discussion. Insurers and providers have a closer affinity, for example, while consumers, including employees, businesses and local government entities, tend to have little to no power in these relationships. Hospitals and specialists have more power than primary care and behavioral medicine. Understanding these internecine wars within health care is key to understanding the core relationships and who is outlying. The health care debate is in part about how to give outliers more power and equity in the health care process. The most outlying of all voices are those of patients and consumers. Theoretically, in new models of health care, their voice is supposed to be represented by larger purchasing pools that can negotiate for better service at less cost.

4) Identify the key cost drivers — Isolate the attributes

The hardest part of this work is to isolate the variables/attributes or cost drivers, and understand how each group contributes to improving these practices. These are topics that should be of mutual concern but that are not universally understood and standardized. Examples of cost drivers included:

- Use of and dissemination of best practices (end-of-life care, chronic diseases)

- Use of Technology

- Number and Variety of Insurance Plans

- Cost of drugs

- Reimbursement rates

- Risk Management (use of defensive medicine, malpractice, high-risk pools)

Each of these attributes needed to be further understood from the perspective of the key players to understand how it contributes to cost. For example, Massachusetts has an excellent universal health care law, under which consumers can choose from about 18 different plans through the Connector, but there are also public, private and individual plans, resulting in over 16,000 different plans. Some cost containment could be achieved by having a “shared minimal contract” that sets a high standard of care and captures the essence of basic wellness. To do this, the players and consumers need to find a common language for describing conditions and coverage.

5) Capture the AS IS definitions

Since these conditions and coverage are not standardized, it is useful to understand what the current status is. Understanding AS IS definitions helps to capture the many disconnects between groups. For example, while consumers argue about the cost of deductibles, insurance companies might spend more money in order to reduce the high cost of hospitalization. The result is like a balloon filled with water: one end gets leaner, while more pressure is put on the other end of the balloon, the consumer. Capturing the cacophony, instead of the symphony, turned out to be the most valuable part of the work. We discovered that we did not have to reach a common understanding; it was enough to capture the current status and its impacts.
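For readers who keep their taxonomy notes in code, here is a minimal sketch of how AS IS definitions might be recorded per actor and checked for disconnects. It is an illustration only, not the tool our group used: the `AsIsGlossary` class and its method names are hypothetical, and the definitions are placeholders rather than quotes from any interview. Only the actor names come from the list above.

```python
from collections import defaultdict

# Actor groups named earlier in the post.
ACTORS = ["Insurers", "Providers", "Regulators", "Consumers", "Purchasing agents"]


class AsIsGlossary:
    """Hypothetical helper: stores each actor's current ("as is") definition of a term."""

    def __init__(self, actors):
        self.actors = list(actors)
        # Maps term -> {actor -> definition}
        self._definitions = defaultdict(dict)

    def record(self, term, actor, definition):
        """Save one actor's definition of a term, rejecting unknown actors."""
        if actor not in self.actors:
            raise ValueError(f"unknown actor: {actor}")
        self._definitions[term][actor] = definition.strip()

    def disconnects(self):
        """Return terms for which at least two actors gave different definitions."""
        return sorted(
            term
            for term, by_actor in self._definitions.items()
            if len({d.lower() for d in by_actor.values()}) > 1
        )

    def gaps(self, term):
        """Return actors who have not yet defined the term."""
        return [a for a in self.actors if a not in self._definitions.get(term, {})]


glossary = AsIsGlossary(ACTORS)
# Placeholder wording, not taken from any interview.
glossary.record("deductible", "Consumers", "placeholder definition A")
glossary.record("deductible", "Insurers", "placeholder definition B")
glossary.record("reimbursement rate", "Providers", "placeholder definition C")
glossary.record("reimbursement rate", "Insurers", "Placeholder definition C")

print(glossary.disconnects())       # terms where the actors disagree
print(glossary.gaps("deductible"))  # actors still to be interviewed on this term
```

The design choice here is to store the disagreement rather than resolve it: the structure never picks a "correct" definition, it only reports where the definitions differ and who has not been heard from yet.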

6) Read background content

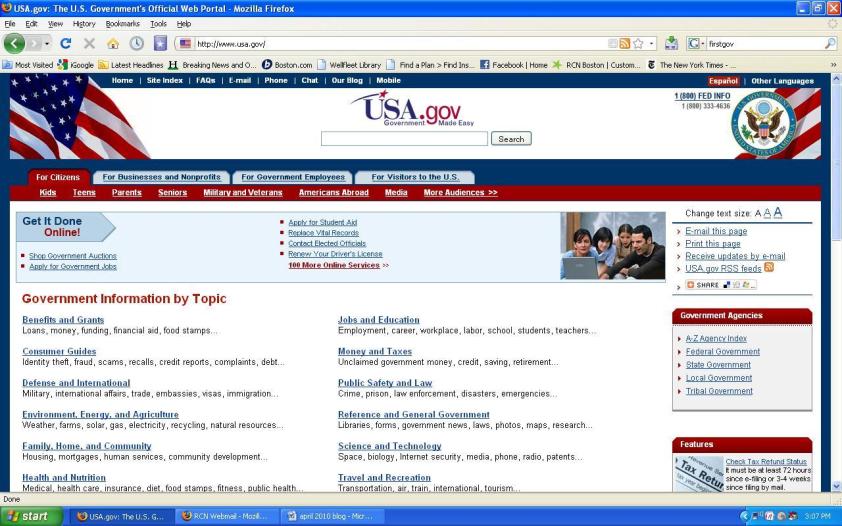

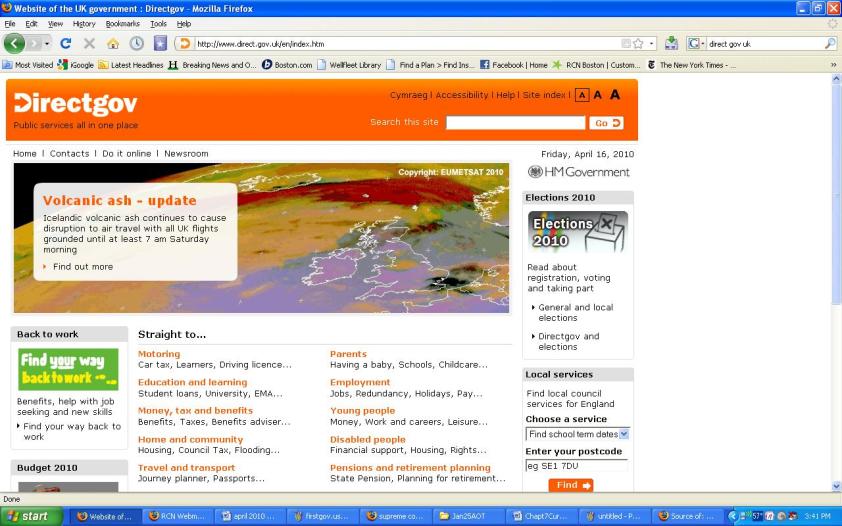

In addition to understanding the “cast and drivers,” it is also important to read studies and literature to keep a broad and balanced perspective. Being in rooms with charming and knowledgeable power players can be quite intoxicating, but to keep it honest, we needed to keep reading, and we needed to ask honest questions about what each player stood to gain by advocating for a certain program. Spending a few hours each week on literature reviews, books, articles and podcasts on general health care was very important to building our group and individual knowledge base and developing our facility with the terminology of health care economics. We used reading to define comparative health care models in other countries (Taiwan, Switzerland, Japan, Canada, Germany, UK, France, and US) and to understand multiple models of health care delivery.

7) Capture concepts in simple diagrams

Even within our small, random data collection group, understanding could be quite diverse. Using simple diagrams to capture concepts turned out to be a powerful shared way to come to a common understanding. Bubble maps, graphs, hierarchical diagrams: any visual representation was useful for clarifying information.

8) If any term is hard to explain with a simple sentence, it probably deserves a taxonomy

“Cost containment” is not a trivial term, but it is an important one to understand, and it is almost impossible to explain without learning something about the health care system. It is worth the time and effort to create a taxonomy that defines the information space, or the information void, because a void gets filled by misunderstanding or misinformation.

Developing a consumer-focused taxonomy for navigating health care turns out to be valuable work, but it is hard to sustain without a dedicated team with sustained funding. A consumer-focused taxonomy would help navigate the health care debate and could be used across all actors, including insurers, providers, governmental entities and consumers, who want to share information with a confused but curious public.

~ Marlene Rockmore
